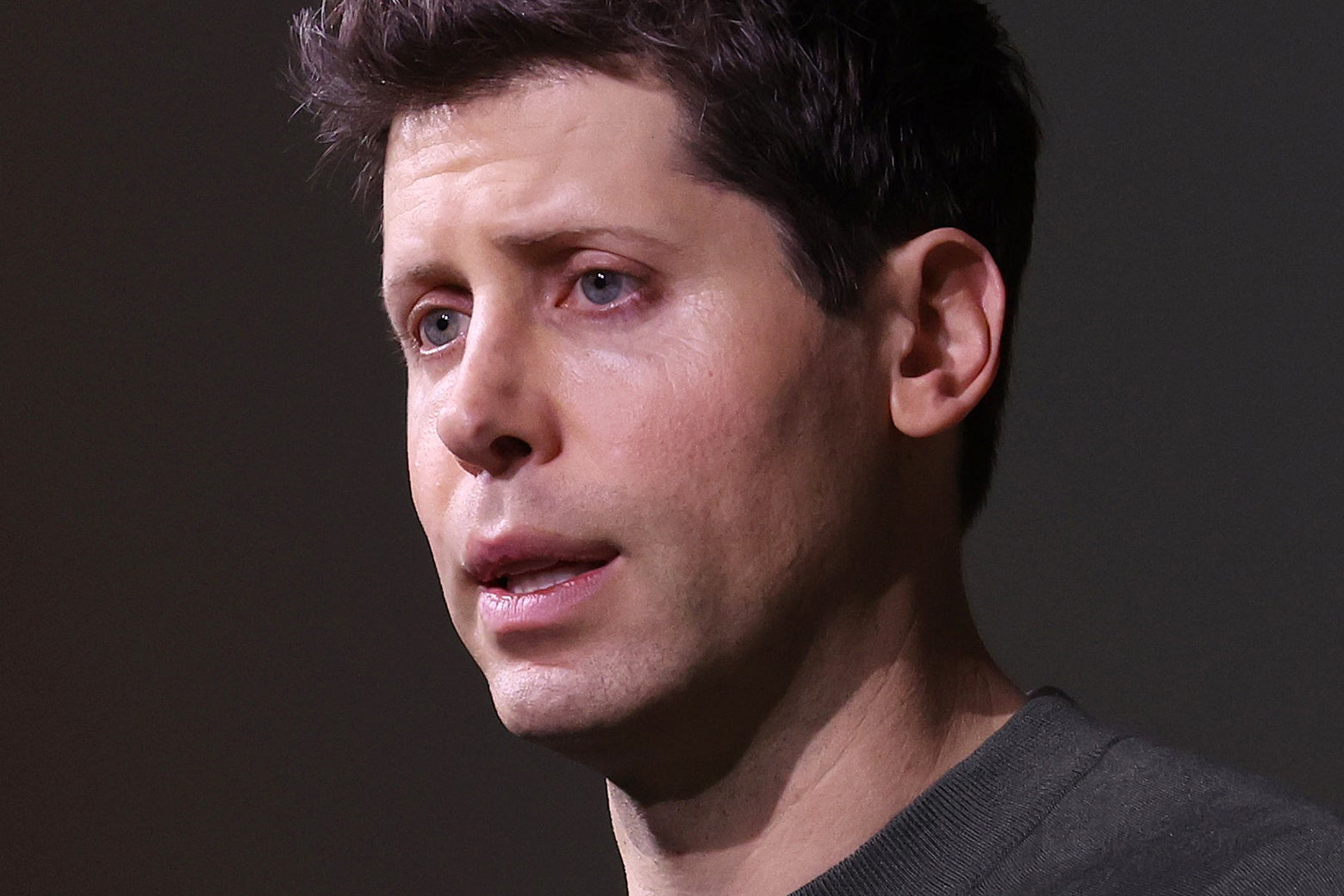

I Guess We’ll Just Have to Trust This Guy, Huh?

Sam Altman now has more power, and fewer constraints, than ever. What Sam Altman can get away with at OpenAI now.

The deposed tech CEO returning to his company triumphant is enough of a Silicon Valley trope that they made it part of the HBO sitcom literally called Silicon Valley. Thomas Middleditch’s character wants to build a consumer-facing product, and his startup’s board of directors wants to sell to businesses, and Middleditch’s character gets fired and goes away until the board is ready to do what he wants. He comes back after a few weeks, probably, although it’s hard to say on account of it not being real. More famously, Steve Jobs left Apple in 1985 after a board struggle that resulted in his being pushed out. (Whether it was an outright firing is a subject of some debate.) Jobs needed 12 years, and Apple’s decision to buy a company he’d started in the meantime, to come home in 1997.

I’ve been thinking about that timing the past few days: Apple had Steve Jobs, and when Jobs beefed with his bosses, more than a decade had to elapse before he could run the company again. OpenAI had someone named Sam Altman, who was quite famous, but whom most people definitely had not heard of until a week and a half ago. OpenAI’s unusually structured board of directors decided it didn’t like Altman and fired him. Altman’s time in exile was five days.

The mechanics of Altman’s firing are particular and peculiar, and the precise rationale still isn’t certain beyond the broad strokes. But what does feel pretty clear is that Altman has juice. He has accrued that influence because the employees at a company potentially worth $80 billion threatened to walk out and make OpenAI something much less valuable if Altman weren’t around. That makes Altman a powerful man in tech, obviously. But the positioning of OpenAI in the artificial intelligence war between a bunch of the world’s most powerful companies makes him something more: a person regular people need to care about.

It’s a bummer, but we just might all be better off if Sam Altman is as capable a CEO and as swell a guy as OpenAI’s employees seem to think he is.

The big consensus from Altman’s firing and rehiring is that the rich guys won. (‘A.I. Belongs to the Capitalists Now,’ says the New York Times. ‘The Money Always Wins,’ says the Atlantic.) Meta, Google, and Microsoft were already in this business, and few people seriously thought before this past week that capitalist interests wouldn’t decide much of A.I.’s future direction. But OpenAI’s directors opened the door, however briefly in the end, for a tipping point. A nonprofit board was in charge of a for-profit (but capped-profit) company. Altman wanted OpenAI to grow. He wanted to take the sports car out on the highway and see if it could really purr, believing that amping up the business was either valuable for its own sake or necessary to unlock more of OpenAI’s potential. Four of the six members of OpenAI’s board, it seems, wanted the company to move more circumspectly. So few of the specifics of their tête-à-tête with Altman have become public that it’s hard to assess who’s right.

But it is clear who won (Altman) and which ideological vision (regular capitalism, instead of some earthy, restrained ideal of ethical capitalism) will carry the day. If Altman’s camp is right, then the makers of ChatGPT will innovate more and more until they’ve brought to light A.I. innovations we haven’t thought of yet. If the now-former members of the board (ousted as part of an agreement to bring Altman back) are right, we’ll get to watch Terminator unfold in real life. That, exaggerated for effect, has always been the debate over how quickly to move forward in developing artificial general intelligence. The significance of OpenAI shedding board members from the not-explicitly-for-profit camp is that the other big players in A.I. development were already on the other team. Any notion of OpenAI becoming some sort of enduring bulwark against capital’s domination of A.I. has gotten less realistic since the board took on Altman and lost. But a funny thing about this story is that while capitalism as a whole won out, no specific company did. That vacuum makes Altman, who already must feel quite important right now, even more important.

As the saga unfolded, the organizational chaos at OpenAI looked poised to become a knockout win for Microsoft. The computer giant already owned a huge piece of the for-profit part of OpenAI. It would keep owning that piece, whatever it was worth. But Microsoft was going to hire Altman and some enormous number of his OpenAI employees, extracting some of the most valuable assets from a company it had already invested in (and whose intellectual property it could already use). As Ben Thompson argued at Stratechery, Microsoft, in a way, was set to acquire OpenAI for nothing more than the cost of some OpenAI headcount, led by Altman. Microsoft would get OpenAI’s geniuses in a manner that raised no antitrust red flags, a valuable thing to a company that had just fought regulators almost to the death over a big video game deal. Altman and all of Altman’s people would be Microsoft’s people, beholden to Microsoft’s objectives. Instead, after five days of employee outrage, Altman returned to the big chair at OpenAI.

Altman expresses the same worries about A.I. that everyone has. (He has talked to Congress about a bunch of them, including the rapidly approaching car fire that is voting misinformation ahead of a U.S. presidential election.) Altman is of course pressing ahead anyway on raising lots of money for his A.I. business, but he at least talks like someone wrestling with grave questions about controlling the machines. (A more cynical and not unreasonable view is that, having gotten ahead, Altman would like competitors to have to cut through maximal red tape to catch up to what OpenAI has built.)

Executives at established tech giants might have their own honest scruples about where all of this is heading. Most of them were CS dorks before they ran the most valuable businesses in the world. They’ve spent a lot of time thinking about the ways their products might change society, if for no other reason than risk management. But nobody who’s serious believes their companies will, in the long run, go anywhere but toward the most profit. They have to do that, as public companies beholden to shareholders. (There is a compelling argument that because the biggest shareholders of big companies are diversified institutional investors and index funds that want the whole economy to do well, acting with some social responsibility to avoid wrecking the world is how big companies protect their shareholders. But that hasn’t seemed to catch on as a decision-making priority at big companies.)

A.I. is a special sector, though, because the public is skeptical of companies as stewards of this kind of world-changing technology. Republicans, Democrats, and independents all agree, according to Ipsos polling from July, which found that 83 percent of Americans ‘do not trust the companies developing A.I. systems to do so responsibly.’ The biggest companies are cognizant of this image problem, because they don’t live under rocks, and are dying to be seen as responsible social actors. The greatest coup that an army of Google public relations staffers ever pulled was getting 60 Minutes to visit the company’s campus and sit with a pensive-looking Sundar Pichai, who spoke soberly and eloquently about Google’s urgency in handling A.I. responsibly. CBS journalist Scott Pelley described Google as sitting somewhere in the ‘optimistic middle’ between A.I. doomsayers and utopians, and that was some of the best corporate communications work of the year. Microsoft’s Satya Nadella, Meta’s Mark Zuckerberg, and every other tech CEO of consequence has sought to come off the same way. They might all believe it, but they’re executing a branding campaign because they know that people are naturally inclined to think they’re going to destroy the world.

Altman has a good deal less public relations baggage, if for no other reason than that most people had no occasion to develop an opinion about him until a few days ago. But more than any other single person, he’s been the face of the A.I. boom of the past year. He has come out of it with a pretty good reputation, one that may or may not last, but which had Microsoft eager to tout its association not just with OpenAI but with Altman specifically, and had OpenAI’s employees primed for mutiny when he was sacked. It is possible that in a cutthroat arms race to get a leg up in the business of A.I., there’s just one famous guy, Altman, who has both industry credibility and a public image that will make regular people trust his products.

One reason to follow Altman’s journey from here is that OpenAI is already a leader in a critical, burgeoning industry. The other is that, while there may not be a single person who could urge businesses to pump the brakes a bit on some future A.I. issue, Altman is a better bet than anyone else. With the board that tried to chasten him swapped out, Altman has a lot of leeway to make occasional principled stands while making zillions of dollars, or not. His peers at public companies are more constrained. (Another sign of Altman’s standing is that when he testified before Congress last spring, the lawmakers questioning him were actually questioning him. The hearing was not a soundbite-filled circus with politicians getting off easy dunks, because Altman isn’t yet the kind of unpopular lightning rod who would make that worthwhile.)

The letter those employees wrote to OpenAI’s old board of directors, demanding Altman’s reinstatement and threatening to quit, was interesting for what it said, but also for what it didn’t. The employees were enraged by the method of Altman’s ouster and a perceived lack of board transparency. They showed immense loyalty to Altman but didn’t get specific about why. The letter mentioned a long list of stakeholders that deserved consideration. One was ‘the public,’ and one was ‘our mission.’ OpenAI’s stated mission is ‘to ensure that artificial general intelligence benefits all of humanity.’

The good interpretation of the employees’ letter is something along the lines of ‘OpenAI’s employees think Altman is a great boss, and they work at OpenAI to make a positive difference in the world, and they’ve taken a stand on Altman’s behalf so that he can usher in a benevolent artificial intelligence boom, or at least give society a fighting chance in a coming war against the machines.’ The bad interpretation is more cultish, to the effect of ‘Altman thinks he’s the smartest guy in the room, and everyone who works for him believes the same, and he’s a gold mine in the form of a human body, and the employees all have stock options and career aspirations.’ We may not know for a while which version is right.

Reference: https://slate.com/technology/2023/11/sam-altman-return-openai-power-board.html


MediaDownloader.net -> Free Online Video Downloader, Download Any Video From YouTube, VK, Vimeo, Twitter, Twitch, Tumblr, Tiktok, Telegram, TED, Streamable, Soundcloud, Snapchat, Share, Rumble, Reddit, PuhuTV, Pinterest, Periscope, Ok.ru, MxTakatak, Mixcloud, Mashable, LinkedIn, Likee, Kwai, Izlesene, Instagram, Imgur, IMDB, Ifunny, Gaana, Flickr, Febspot, Facebook, ESPN, Douyin, Dailymotion, Buzzfeed, BluTV, Blogger, Bitchute, Bilibili, Bandcamp, Akıllı, 9GAG