What’s the Difference Between RAM and Cache Memory?

Both RAM and cache are fast primary memory. But what’s the difference between them?

If you are a technology enthusiast, you might have heard about caches and how they work with the RAM on your system to make it faster. But have you ever wondered what cache is and how it’s different from RAM?

Well, if you have, you are in the right place because we will look at everything that differentiates cache memory from RAM.

Get to Know the Memory Systems on Your Computer

Before we start comparing RAM to cache, it’s important to understand how the memory system on a computer is designed.

You see, both RAM and cache are volatile memory storage systems. This means that they hold data only temporarily, for as long as power is supplied to them. Therefore, when you turn off your computer, all the data stored in the RAM and cache is lost.

For this reason, any computing device has two different types of storage systems: primary and secondary memory. The drives where you save your files are the secondary memory on a computer system, and they keep their data when the power is off. The primary memory systems, on the other hand, supply data to the CPU while the computer is turned on.

But why have a memory system on the computer which can’t store data when it’s turned off? Well, there is a big reason why primary storage systems are essential for a computer.

You see, although the primary memory on your system is incapable of storing data when there’s no power, it is much faster than secondary storage. To put numbers on it, secondary storage systems like SSDs have an access time of about 50 microseconds.

In contrast, primary memory systems, such as random access memory, can supply data to the CPU every 17 nanoseconds. Therefore, primary memory systems are almost 3,000 times faster when compared to secondary storage systems.
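As a quick check of that ratio, the two access times quoted above can be put into the same unit and divided. The variable names here are only illustrative.

```python
# Access times quoted in the text, both expressed in nanoseconds.
SSD_ACCESS_NS = 50_000   # 50 microseconds
RAM_ACCESS_NS = 17       # 17 nanoseconds

# How many RAM accesses fit into the time of one SSD access.
speedup = SSD_ACCESS_NS / RAM_ACCESS_NS
print(f"RAM is roughly {speedup:,.0f}x faster than the SSD")
```

The result lands just under 3,000, which matches the "almost 3,000 times" figure.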

Due to this difference in speeds, computer systems come with a memory hierarchy, which enables the data to be delivered to the CPU at astonishingly fast speeds.

Here is how data moves through the memory systems in a modern computer.

- Storage Drives (Secondary Memory): This device can store data permanently but is not as fast as the CPU. Due to this, the CPU cannot access data directly from the secondary storage system.

- RAM (Primary Memory): This storage system is faster than the secondary storage system but cannot store data permanently. Therefore, when you open a file on your system, it moves from the hard drive to the RAM. That said, even the RAM is not fast enough for the CPU.

- Cache (Primary Memory): To solve this problem, a particular type of primary memory known as cache memory is embedded in the CPU and is the fastest memory system on a computer after the CPU registers. This memory system is divided into three levels, namely the L1, L2, and L3 cache. Therefore, any data which needs to be processed by the CPU moves from the hard drive to the RAM and then to the cache memory. That said, the CPU does not operate on data while it sits in the cache; the data must first be loaded into a register.

- CPU Registers (Primary Memory): CPU registers are minute in size, and their width depends on the processor architecture. Each register typically holds 32 or 64 bits of data. Once the data moves into these registers, the CPU can access it and perform the task at hand.
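The path data takes through this hierarchy can be sketched as a lookup that tries the fastest level first and falls back to slower ones. This is a toy model, not how real hardware is implemented: the names `LEVELS` and `fetch` are hypothetical, the 1 ns cache latency is an assumed placeholder, and only the RAM and SSD latencies come from the figures quoted earlier.

```python
# Each level: (name, latency in ns, set of items currently held there).
# The 1 ns cache latency is an assumption made for illustration only.
LEVELS = [
    ("cache", 1, {"a"}),
    ("RAM", 17, {"a", "b"}),
    ("SSD", 50_000, {"a", "b", "c"}),
]

def fetch(key):
    """Search from the fastest level to the slowest.

    Returns the level where the key was found and the total time spent,
    counting the latency of every level that had to be checked.
    """
    elapsed_ns = 0
    for name, latency_ns, contents in LEVELS:
        elapsed_ns += latency_ns
        if key in contents:
            return name, elapsed_ns
    raise KeyError(key)

for key in ("a", "b", "c"):
    level, cost = fetch(key)
    print(f"{key!r} found in {level} after {cost} ns")
```

Data that is already in the cache comes back almost immediately, while anything that has to be pulled from the drive pays the full cost of every level above it.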

Understanding RAM and How It Works

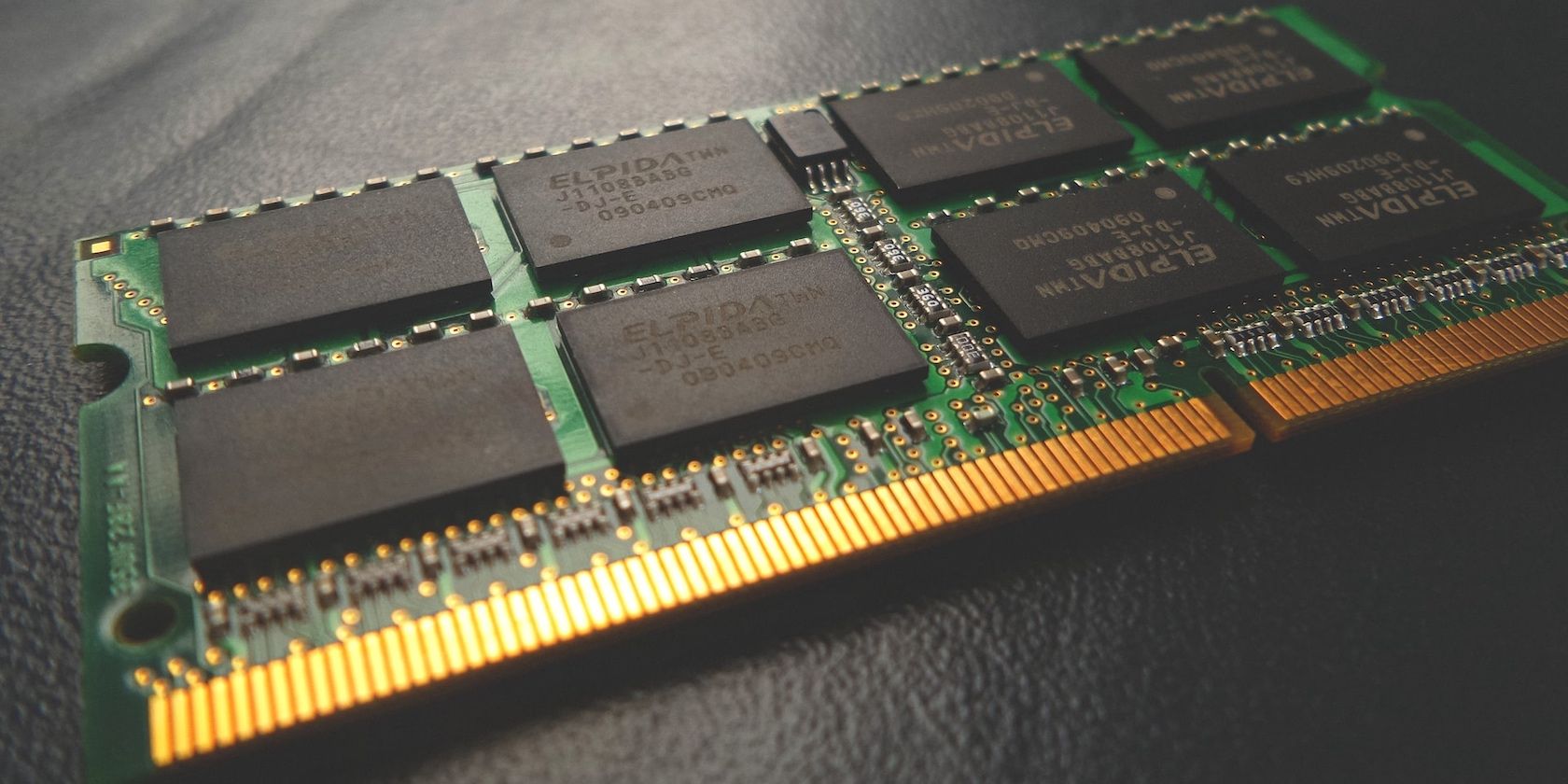

As explained earlier, the random access memory on a device is responsible for storing and supplying data to the CPU for programs on the computer. To store this data, random access memory uses a dynamic memory cell (DRAM).

This cell is created using a capacitor and a transistor. The capacitor in this arrangement stores charge, and depending on its state of charge, the memory cell holds either a 1 or a 0.

If the capacitor is fully charged, it is said to store a 1. On the other hand, when it’s discharged, it is said to store 0. Although the DRAM cell is capable of storing charges, this memory design comes with its flaws.

You see, as RAM uses capacitors to store charge, that charge gradually leaks away. Due to this, data stored in the RAM can be lost. To solve this problem, the charge stored in the capacitors is periodically refreshed using sense amplifiers, which prevents the RAM from losing the stored information.

Although this refreshing of charges enables the RAM to store data when the computer is turned on, it introduces latency in the system as the RAM cannot transmit data to the CPU when it’s being refreshed—slowing the system down.
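The leak-and-refresh behavior described above can be illustrated with a toy model of a single DRAM bit. Everything numeric here is an assumption chosen for readability (charge measured in arbitrary units from 0 to 100, a leak of 10 units per tick, a read threshold of 50), and `DramCell` and `run` are hypothetical names, not part of any real library.

```python
class DramCell:
    """Toy model of one DRAM bit: a capacitor whose charge leaks over time."""

    FULL = 100           # assumed charge of a freshly written 1
    LEAK_PER_TICK = 10   # assumed charge lost per time step
    THRESHOLD = 50       # charge above this reads back as 1

    def __init__(self, bit):
        self.charge = self.FULL if bit else 0

    def tick(self):
        # The capacitor leaks a little charge every time step.
        self.charge = max(0, self.charge - self.LEAK_PER_TICK)

    def read(self):
        return 1 if self.charge > self.THRESHOLD else 0

    def refresh(self):
        # Sense the current value and write it back at full strength.
        self.charge = self.FULL if self.read() else 0


def run(ticks, refresh_every=None):
    """Store a 1, let time pass, and return what the cell reads afterwards."""
    cell = DramCell(1)
    for t in range(1, ticks + 1):
        cell.tick()
        if refresh_every and t % refresh_every == 0:
            cell.refresh()
    return cell.read()


print("without refresh:", run(10))
print("with refresh every 3 ticks:", run(10, refresh_every=3))
```

Without refreshing, the stored 1 decays into a 0; with a refresh that arrives before the charge drops below the threshold, the bit survives.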

In addition to this, the RAM is plugged into the motherboard, which is, in turn, connected to the CPU through its socket. Hence, there is a considerable distance between the RAM and the CPU, which increases the time it takes for data to reach the CPU.

Due to the reasons mentioned above, RAM only supplies data to the CPU every 17 nanoseconds. At that speed, the CPU can’t reach its peak performance. This is because the CPU needs to be supplied with data every quarter of a nanosecond to deliver the best performance when running on a turbo boost frequency of 4 Gigahertz.
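The quarter-of-a-nanosecond figure follows from the clock frequency, and the same arithmetic shows how many clock cycles pass during one RAM access. This is a back-of-the-envelope sketch using only the numbers quoted above.

```python
CLOCK_HZ = 4e9        # 4 GHz turbo boost frequency from the text
RAM_ACCESS_NS = 17    # RAM access time from the text

# Duration of one clock cycle in nanoseconds.
cycle_ns = 1e9 / CLOCK_HZ

# Clock cycles that elapse while the CPU waits for one RAM access.
cycles_per_ram_access = RAM_ACCESS_NS / cycle_ns

print(f"one cycle lasts {cycle_ns} ns")
print(f"one RAM access spans {cycles_per_ram_access:.0f} cycles")
```

In other words, a CPU that had to wait on RAM for every piece of data would sit idle for dozens of cycles at a time.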

To solve this problem, we have cache memory, another temporary storage system much faster than the RAM.

Cache Memory Explained

Now that we know about the caveats that come with RAM, we can look at cache memory and how it solves the problem which comes with RAM.

First and foremost, cache memory is not present on the motherboard. Instead, it is placed on the CPU itself. Due to this, data is stored closer to the CPU—enabling it to access data faster.

In addition to this, cache memory does not store data for all the programs running on your system. Instead, it only keeps data that is frequently requested by the CPU. Due to these differences, the cache can send data to the CPU at astonishingly fast speeds.
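The idea of keeping only frequently requested data close at hand can be sketched in software. The example below uses a least-recently-used (LRU) eviction rule as a stand-in; the text does not say which policy a CPU uses, and real hardware caches work on fixed-size lines with their own replacement logic. `TinyCache` and the `ram` dictionary are hypothetical names for illustration.

```python
from collections import OrderedDict


class TinyCache:
    """A small LRU cache sitting in front of a slower backing store."""

    def __init__(self, capacity, backing):
        self.capacity = capacity
        self.backing = backing        # dict standing in for RAM
        self.lines = OrderedDict()    # address -> value, oldest first
        self.hits = 0
        self.misses = 0

    def read(self, address):
        if address in self.lines:
            # Hit: serve from the cache and mark as most recently used.
            self.hits += 1
            self.lines.move_to_end(address)
            return self.lines[address]
        # Miss: fetch from the backing store and keep a copy.
        self.misses += 1
        value = self.backing[address]
        self.lines[address] = value
        if len(self.lines) > self.capacity:
            # Evict the least recently used entry to make room.
            self.lines.popitem(last=False)
        return value


ram = {address: address * 2 for address in range(8)}
cache = TinyCache(capacity=2, backing=ram)
for address in [0, 1, 0, 0, 2, 0]:
    cache.read(address)
print(f"hits={cache.hits} misses={cache.misses}")
```

Address 0 is requested repeatedly, so after the first miss it is served from the cache every time, while the rarely used address 1 is the one that gets evicted.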

Furthermore, unlike RAM, cache memory uses static cells (SRAM) to store data. Unlike dynamic cells, static cells do not need refreshing because they don’t use capacitors to store charge.

Instead, each static cell uses a set of six transistors to store one bit of information. Because it relies on transistors rather than a capacitor, the static cell does not lose charge over time, enabling the cache to supply data to the CPU at much faster speeds.

That said, cache memory, too, has its flaws. For one, it is much more costly than RAM. Additionally, a static RAM cell is much larger than a DRAM cell, as a set of six transistors is used to store one bit of information. This is substantially larger than the DRAM cell’s design of one transistor and one capacitor.

Due to this, the memory density of SRAM is much lower, and placing a single SRAM with a large storage size on the CPU die is not possible. Therefore, to solve this problem, cache memory is divided into three levels, namely L1, L2, and L3 cache, with the smallest and fastest level sitting closest to the CPU cores.
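To get a feel for the density gap, the transistor cost of the two cell designs can be compared for the same capacity. The 32 MiB size below is an assumed example, not a figure from the text, and the count covers only the storage cells, ignoring any surrounding control circuitry.

```python
CAPACITY_MIB = 32   # assumed example capacity, chosen for illustration

SRAM_TRANSISTORS_PER_BIT = 6   # six-transistor static cell
DRAM_TRANSISTORS_PER_BIT = 1   # one transistor (plus one capacitor) per cell

# Total number of bits in the assumed capacity.
bits = CAPACITY_MIB * 1024 * 1024 * 8

sram_transistors = bits * SRAM_TRANSISTORS_PER_BIT
dram_transistors = bits * DRAM_TRANSISTORS_PER_BIT

print(f"SRAM: {sram_transistors:,} transistors")
print(f"DRAM: {dram_transistors:,} transistors (plus one capacitor each)")
```

Under these assumptions, the SRAM version needs six times as many transistors for the same number of bits, which is why large caches take up so much die area.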

RAM vs. Cache Memory

Now that we have a basic understanding of RAM and cache, we can look at how they compare against one another.

Cache Memory Is Much Faster Than RAM

Both RAM and cache are volatile memory systems, yet they serve distinct tasks. RAM stores the programs running on your system, while cache supports the RAM by storing frequently used data close to the CPU, which improves performance.

Therefore, if you are looking for a system that offers great performance, it’s essential to look at the RAM and cache it comes with. A good balance between both memory systems is essential to getting the most out of your PC.

Reference: https://www.makeuseof.com/difference-ram-and-cache-memory/
