These Latest Celebrity Deepfakes Show How Advanced Scams Have Become

It’s more important than ever to be vigilant and careful on the internet.

As long as the web has existed, this advice has rung true: ‘Don’t believe everything you see on the internet.’ Whether it’s a personal blog, a tweet, a YouTube video, or a TikTok, anyone can say anything on here, and it’s tough to know whether or not they’re right (or even telling the truth).

But we’re at an inflection point in internet literacy: Generative AI has reached a scary place, with tech good enough to mimic the likeness of celebrities and make these ‘clones’ say whatever they want. To those in the know, these deepfakes might not be convincing yet, but what about the average social media user? It seems we’re rapidly approaching a point where the general public will start to believe these fraudulent videos are real, and that’s a frightening thought.

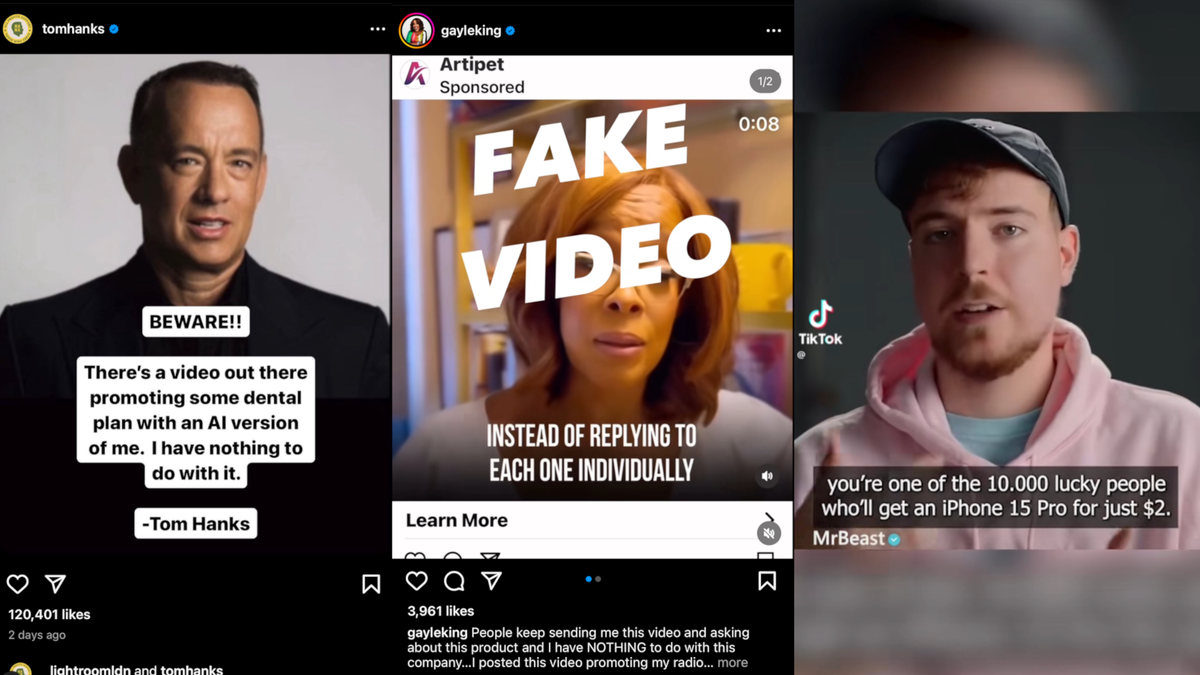

There are three high-profile examples of this just from the past week or so. The first is Tom Hanks: The actor posted a screenshot on his Instagram from a video promoting a ‘dental plan,’ with a spokesperson that looks like Tom Hanks if he had his teeth reinstalled. The video itself doesn’t appear to be public, and Hanks didn’t share it or the name of the company promoting it. But the assumption is some company or person made a deepfake of Tom Hanks to sell a dental product. As I mentioned, taking a good look at the image, you can already tell there’s something ‘off’ about his face, and had we been able to see the video, it’s likely that the motion would have the stiff, uncanny valley look that so many AI deepfakes have.

But Mr. Hanks was far from the only celebrity this week to deal with a deepfake issue. Gayle King, one of the anchors of CBS Mornings, posted a deepfake to her Instagram account, stating that people keep sending her the video and that she has nothing to do with it. This time, she also shared the original video the deepfake is based on, an innocent clip of King discussing having Bruce Springsteen on her show.

Keen observers will be able to tell the first video is fake: The lip movements don’t match up with the audio, and while the audio resembles King’s voice, it’s too stiff, as if she were pretending to be a bad actor while reading a script. I’m sure any of us attuned to these limitations of deepfakes and generative AI will notice the telltale signs right away, but I’m not convinced everyone will immediately see this as fake.

Third, internet sensation Mr. Beast is currently going viral for a deepfake advertisement that’s being shown to TikTok users, myself included. In it, ‘Mr. Beast’ congratulates the viewer for being among 10,000 users selected to win an iPhone 15 Pro for just two dollars. Lucky you!

The video in question is one of the better ones I’ve seen, although it also has its obvious issues. Whoever made this one took care to make Mr. Beast seem more expressive as he spoke, in an attempt to make the whole interaction seem more natural. I think that could be effective for some, but, again, it’s not 100% convincing. Watching the video knowing it’s fake brings all the imperfections to the surface.

Even if you think it’s obvious these examples are fake, as Marques Brownlee says, this is the worst this tech is going to be. Deepfakes are only going to keep improving, aiming for the ultimate goal of being indistinguishable from real video.

If you’ve enjoyed any of the AI song covers that are blowing up all over the internet, you know how good the tech is getting. The voice actor for Plankton from SpongeBob should rightly be concerned about how excellent these covers are, such as Plankton’s cover of Beggin’. Frank Sinatra might have died when Dua Lipa was two, but this AI cover of him singing Levitating is a bop.

This tech can even translate your speech and dub over it in real time, in your voice. While there are a host of apps out there with this ability, even Spotify is testing it to translate podcasts in the hosts’ voices.

At this point, the best deepfakes are audio-only, and even then they still have their accuracy problems. But what happens when a bad actor can make a fake Mr. Beast ad that most people fall for? Imagine a truly convincing Mr. Beast saying directly to young and impressionable fans, ‘All you have to do to enter my giveaway is enter your banking information, so I can wire you the winnings directly.’ Maybe the ‘contest’ will be held inside a ‘Mr. Beast app,’ which actually installs malware on your device.

Of course, there are more frightening scenarios to consider. We’re approaching a presidential election next year. How good will deepfake technology get by November 2024? Will someone open TikTok before heading to the polls to watch a deepfake of Joe Biden saying it’s his ultimate goal to imprison his political enemies? Or maybe one of Donald Trump telling his supporters to show up armed to the polls?

Be diligent when watching videos online

Social media companies need to be more proactive about attacking these fake videos before they spread to others, but we also have a part to play in all this. We need to be careful, now more than ever, when casually scrolling and clicking around this great big internet of ours. Just because you see a video of a ‘celebrity’ saying something, or endorsing a product, doesn’t make it real—not anymore. Check the account it’s posted to diligently: If it’s supposedly the real personality, their account should be verified (unless we’re talking about a useless platform like X, in which case all posts should be treated as false unless unequivocally proven otherwise).

While we wait for deepfakes to get really good, there are still plenty of red flags that tell you when something is illegitimate. Eye and mouth movements will appear strange, for one. Look at that Mr. Beast video: While they tried their best to make him expressive, his eyes are pretty vacant for the first half of the clip. And while they matched the lip movements well, many deepfakes aren’t good at that yet.

Many of these videos appear in very poor quality as well. That’s because increasing the resolution reveals how janky the video is. Deepfake creators start with a real video of a person, whether it’s the celebrity or not, then overlay the celebrity’s face on top of that video and manipulate it to their liking. It’s pretty hard to do this in high resolution without blending issues, so you see layers clipping in and out of each other.

A healthy dose of skepticism goes a long way on the internet. Now that generative AI is taking over, dial up the skepticism as much as you can.

Reference: https://lifehacker.com/these-latest-celebrity-deepfakes-show-how-advanced-scam-1850895311
