Large language models can help home robots recover from errors without human help

There are countless reasons why home robots have found little success post-Roomba. Pricing, practicality, form factor and mapping have all contributed to failure after failure. Even when some or all of those are addressed, there remains the question of what happens when a system makes an inevitable mistake.

This has been a point of friction on the industrial level, too, but big companies have the resources to address problems as they arise. We can’t, however, expect consumers to learn to program or hire someone who can help any time an issue arises. Thankfully, this is a great use case for large language models (LLMs) in the robotics space, as exemplified by new research from MIT.

A study set to be presented at the International Conference on Learning Representations (ICLR) in May purports to bring a bit of ‘common sense’ into the process of correcting mistakes.

‘It turns out that robots are excellent mimics,’ the school explains. ‘But unless engineers also program them to adjust to every possible bump and nudge, robots don’t necessarily know how to handle these situations, short of starting their task from the top.’

Traditionally, when a robot encounters issues, it will exhaust its pre-programmed options before requiring human intervention. This is a particular challenge in an unstructured environment like a home, where any number of changes to the status quo can adversely impact a robot’s ability to function.

Researchers behind the study note that while imitation learning (learning to do a task through observation) is popular in the world of home robotics, it often can’t account for the countless small environmental variations that can interfere with regular operation, thus requiring a system to restart from square one. The new research addresses this, in part, by breaking demonstrations into smaller subsets, rather than treating them as part of a continuous action.

This is where LLMs enter the picture, eliminating the requirement for the programmer to label and assign the numerous subactions manually.

‘LLMs have a way to tell you how to do each step of a task, in natural language. A human’s continuous demonstration is the embodiment of those steps, in physical space,’ says grad student Tsun-Hsuan Wang. ‘And we wanted to connect the two, so that a robot would automatically know what stage it is in a task, and be able to replan and recover on its own.’

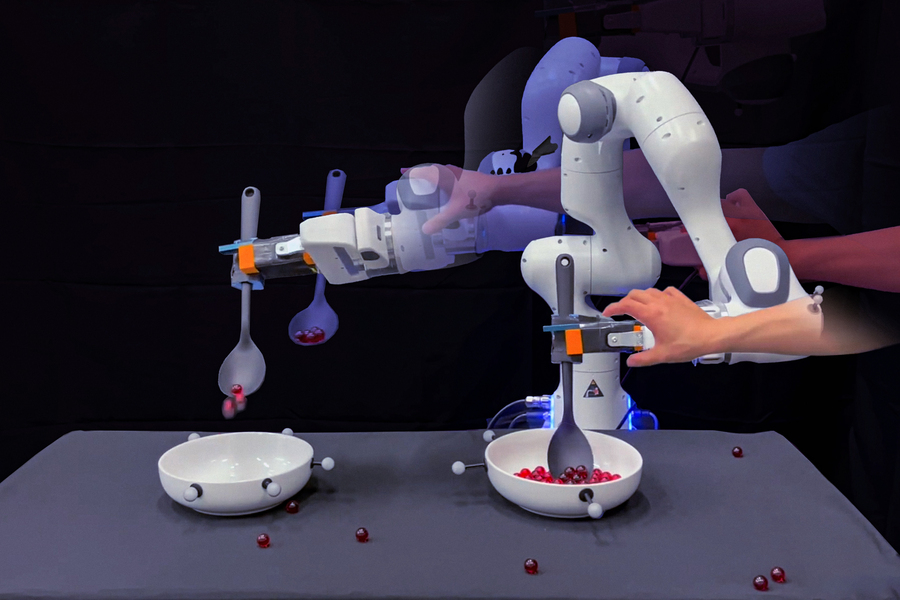

The particular demonstration featured in the study involves training a robot to scoop marbles and pour them into an empty bowl. It’s a simple, repeatable task for humans, but for robots, it’s a combination of various small tasks. The LLMs are capable of listing and labeling these subtasks. In the demonstrations, researchers sabotaged the activity in small ways, like bumping the robot off course and knocking marbles out of its spoon. The system responded by self-correcting the small tasks, rather than starting from scratch.
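As a rough illustration of the idea (not the MIT team’s implementation), the Python sketch below shows why tracking subtasks lets a robot resume from the step that was disturbed instead of restarting. The subtask names, the `State` fields and the rule-based `current_subtask` function are all hypothetical stand-ins invented for this example; in the study, an LLM lists the subtasks and the system works out which stage the robot is in.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

# Hypothetical subtask labels, standing in for steps an LLM might list.
SUBTASKS = ["reach", "scoop", "transport", "pour"]


@dataclass
class State:
    at_source: bool = False   # spoon is at the bowl of marbles
    holding: bool = False     # spoon currently holds marbles
    at_target: bool = False   # spoon is above the empty bowl
    poured: bool = False      # marbles are in the target bowl


def current_subtask(s: State) -> Optional[str]:
    """Toy stage detector: infer the next subtask from the observed state."""
    if s.poured:
        return None           # task finished
    if s.holding and s.at_target:
        return "pour"
    if s.holding:
        return "transport"
    if s.at_source:
        return "scoop"
    return "reach"


def apply(task: str, s: State) -> None:
    """Toy executor: each subtask always succeeds and updates the state."""
    if task == "reach":
        s.at_source, s.at_target = True, False
    elif task == "scoop":
        s.holding = True
    elif task == "transport":
        s.at_source, s.at_target = False, True
    elif task == "pour":
        s.holding, s.poured = False, True


def run(perturbations: Optional[Dict[int, Callable[[State], None]]] = None,
        max_steps: int = 20) -> List[str]:
    """Run the task, re-detecting the stage at every step; return the log."""
    perturbations = perturbations or {}
    state, log = State(), []
    for step in range(max_steps):
        if step in perturbations:
            perturbations[step](state)   # simulated sabotage
        task = current_subtask(state)
        if task is None:
            break
        apply(task, state)
        log.append(task)
    return log


def spill(s: State) -> None:
    """Marbles are knocked out of the spoon."""
    s.holding = False


def bump(s: State) -> None:
    """The arm is pushed away from the target bowl."""
    s.at_target = False


if __name__ == "__main__":
    print(run())             # undisturbed run
    print(run({2: spill}))   # marbles knocked out right after scooping
    print(run({3: bump}))    # arm bumped right after transport
```

In this toy version, spilling the marbles after the scoop only repeats the scoop, and a bump after transport only repeats the transport; in neither case does the robot go back to the first step.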

‘With our method, when the robot is making mistakes, we don’t need to ask humans to program or give extra demonstrations of how to recover from failures,’ Wang adds.

It’s a compelling method to help one avoid completely losing their marbles.

Ref: TechCrunch
