Communication using thought alone? Unbabel unveils AI project to give us superhuman capabilities

Sitting in a meeting room in a startup office in Lisbon, I silently typed a question that only the person opposite me could answer: what kind of coffee had I asked for when I’d arrived at the office? A short moment later, without him moving or opening his mouth, the reply came back via a text message: ‘You had an Americano.’

This wasn’t how I’d expected to spend a Friday afternoon in the city, but here I was, sitting in the offices of enterprise language translation services startup Unbabel, opposite founder and CEO Vasco Pedro, testing what appeared to be a brain-to-computer interface. And it was pretty astounding.

The story begins four years ago.

Unbabel’s core mission, allowing enterprises to understand and be understood by their customers in dozens of languages, long ago led the company to think outside the proverbial ‘box’ and develop several in-house projects exploring other ways to communicate. Now, as a startup with $90 million in VC funding and annual revenues of around $50 million that has survived the pandemic, Unbabel is doing well enough to pursue these projects.

‘We had the idea of looking at brain-to-communication interfaces,’ Pedro tells me. ‘We started doing a bunch of experiments, like a 20% project.’

Unbabel’s innovation team, led by Paulo Dimas, VP of Product Innovation, looked into the way our brains evolved.

‘You have your limbic system, you have your neocortex. But they’ve actually evolved over millions of years. They’re actually separate systems. And I think what we’re starting to see is almost the creation of the ‘uber cortex’, which we think is going to be AI-powered, and it’s going to be existing outside of your biological brain,’ said Pedro.

Dimas and his team started to look into electroencephalography (EEG) systems, some of which are invasive. Elon Musk’s Neuralink is famously exploring invasive brain-computer interface devices for humans.

EMG was the gateway

But then Unbabel’s team hit on the idea of using an EMG system. EMG (Electromyography) measures muscle response or electrical activity in response to a nerve’s stimulation of the muscle. EMG devices are commonplace and trivial. You can even buy them on Amazon for a few bucks.

‘What we realized was that EEG was still too noisy. We wanted to be non-invasive. But EMG, which measures muscle response, was much less noisy. You can more reliably capture some of the signals,’ said Pedro.

The team put sensors in an armband and started to work out what they could measure. ‘We began to think of EMG as a gateway to brain interaction directly,’ Pedro told me.

Then, last year, they decided to hook up an EMG system to Generative AI: specifically, an LLM personalized to the user. But how?

Put simply, the system measured how the wearer of an EMG device would react when thinking of a word. This would help to build up a set of signals which correlated to real words. Feeding those signals into an LLM would mean the creation of a ‘personalized LLM’.
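Unbabel has not published how Halo maps muscle signals to words. As a rough illustration of the calibration idea described above, the sketch below assumes each attempt to think of a word yields a fixed-length feature vector from the armband (for example, signal amplitude per electrode channel). It averages each word's calibration samples into a centroid and decodes a new sample by picking the nearest centroid. The feature representation, the nearest-centroid classifier and the names `calibrate` and `decode` are all hypothetical stand-ins, not Unbabel's method.

```python
import math
from collections import defaultdict

# Hypothetical: each EMG sample is summarised as a fixed-length feature vector,
# e.g. one amplitude value per electrode channel of the armband.
FeatureVector = list[float]

def calibrate(samples: list[tuple[str, FeatureVector]]) -> dict[str, FeatureVector]:
    """Average the calibration samples recorded for each word into one centroid."""
    grouped: dict[str, list[FeatureVector]] = defaultdict(list)
    for word, features in samples:
        grouped[word].append(features)
    centroids = {}
    for word, vectors in grouped.items():
        n = len(vectors)
        # Per-channel mean across all samples recorded for this word.
        centroids[word] = [sum(channel) / n for channel in zip(*vectors)]
    return centroids

def decode(features: FeatureVector, centroids: dict[str, FeatureVector]) -> str:
    """Return the calibrated word whose centroid is closest (Euclidean distance)."""
    return min(centroids, key=lambda w: math.dist(features, centroids[w]))

if __name__ == "__main__":
    # Made-up calibration data for an imaginary two-channel armband.
    training = [
        ("black", [0.9, 0.1]), ("black", [0.8, 0.2]),
        ("coffee", [0.1, 0.9]), ("coffee", [0.2, 0.7]),
    ]
    model = calibrate(training)
    print(decode([0.85, 0.15], model))  # black
```

A per-user centroid model is about the simplest way to express "personalized" decoding; a real system would likely need far richer features and a stronger classifier.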

So when I asked Vasco, via a text message he couldn’t see, what kind of coffee I’d ordered, an AI voice read the question to him through his earbuds. He then thought of words like ‘black coffee’. The LLM matched his physical response to those words, checked (again via audio in his earbud) whether he meant ‘Americano’, and then sent the answer to me as a text message, in this case via the Telegram messaging app.

‘The LLM expands what you’re saying. And then I confirm before sending it back. So there’s an interaction with the LLM where I build what I want it to say, and then I get to approve the final message,’ explained Pedro.

The demonstration happened in front of my eyes. There was no moving or typing. Just Vasco Pedro silently replying by text.

‘The LLM takes a basic prompt and expands it into a fully-fledged answer, almost right away. I wouldn’t have time to type all of that in the natural way. So I’m using the LLM to do the heavy lifting on the response,’ he added.
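The expand-then-approve interaction Pedro describes (a few decoded words go in, the LLM drafts a fuller reply, the wearer approves before anything is sent) can be sketched as a simple loop. Halo reportedly uses GPT-3.5 and Telegram, but this sketch deliberately stubs out the LLM and the confirmation step with hypothetical callables (`expand`, `confirm`) so it runs offline. It does not reflect Unbabel's code or the OpenAI or Telegram APIs.

```python
from typing import Callable, Optional

def compose_reply(
    keywords: list[str],
    expand: Callable[[list[str], int], str],
    confirm: Callable[[str], bool],
    max_drafts: int = 3,
) -> Optional[str]:
    """Ask a (stubbed) LLM for drafts until the wearer approves one.

    Returns the approved message, or None if every draft is rejected,
    so nothing is sent without the wearer's explicit approval.
    """
    for attempt in range(max_drafts):
        draft = expand(keywords, attempt)
        if confirm(draft):
            return draft
    return None

if __name__ == "__main__":
    # Hypothetical stand-in for the LLM: offers a different phrasing per attempt.
    phrasings = ["You had {}.", "I think it was {}.", "{}, I believe."]

    def fake_expand(words: list[str], attempt: int) -> str:
        return phrasings[attempt].format(" ".join(words))

    # Hypothetical stand-in for the wearer's yes/no: approve the second draft.
    def fake_confirm(draft: str) -> bool:
        return draft.startswith("I think")

    message = compose_reply(["an", "Americano"], fake_expand, fake_confirm)
    print(message)  # I think it was an Americano.
```

Returning `None` rather than a fallback draft mirrors Pedro's point that the wearer, not the model, decides what gets sent.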

He also pointed out that the wearer has absolute control of what they are outputting: ‘It’s not recording what I’m thinking. It’s recording what I want to say. So it’s like having a conversation. Other approaches, like Neuralink, are actually trying to measure subconscious interactions. We’re creating a channel that you can use to communicate, but the person has to want to use it.’

Pedro describes it as like having a voice inside your head you can communicate with: ‘The potential for augmentation is huge, but there’s a lot of hurdles still to overcome.’

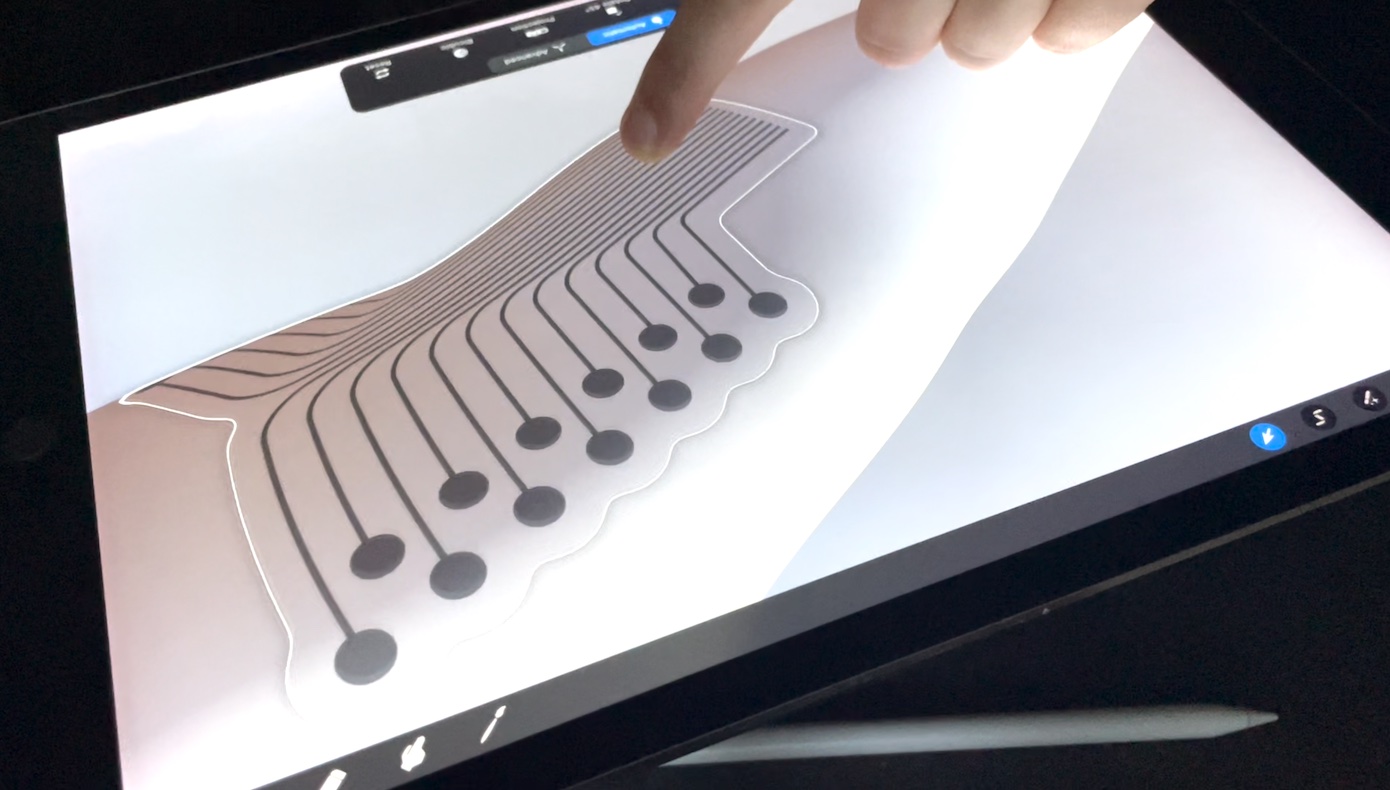

How does it work? The simple answer is an ‘E-Skin’ EMG interface embedded in a kind of flexible sleeve, developed with the Printed Microelectronics Laboratory at the University of Coimbra, led by Professor Tavakoli.

Right now the device is fairly hacked together, but it could eventually be miniaturized.

The birth of Halo

Unbabel dubbed its invention ‘Halo’ (after ‘halogram’). An app on the wearer’s phone connects to a central hub that receives incoming messages and handles communication with the LLM and its responses. The platform currently uses OpenAI’s GPT-3.5.

How Unbabel’s Halo device works.

Pedro likens Unbabel’s project to driverless car companies hacking together data from normal cameras rather than complicated systems, like Lidar: ‘We’re gonna get a shit tonne of data, and we can start using it now. We started working four years ago and the tipping point is now in terms of generative AI. This is the moment when this is going to accelerate.’

Admittedly, this isn’t the first time EMG has been used to control a computer and generate responses.

For instance, Facebook-owned CTRL-labs had an EMG wristband in 2019 that picked up the electrical impulses muscle fibers produce as they move.

However, Unbabel’s approach may be the first time an LLM has been hooked up to EMG in this way. The applications could be far-reaching.

Unlocking the locked-in

Unbabel is now working with the Champalimaud Foundation in Lisbon, which conducts advanced biomedical research and provides interdisciplinary clinical care in areas including ALS. Clearly, though, the system could also be used in other scenarios, such as for people with cerebral palsy.

The need for better interfaces for patients who cannot speak is ongoing. Right now, so-called ‘Augmentative and Alternative Communication’ (AAC) products for people with ALS, such as Grid or Tobii, rely on eye-tracking. These systems often require a frustrating calibration process, are really only workable indoors, and can be fatiguing to use. They also depend on laboriously slow on-screen keyboards.

As Pedro adds: ‘Our prototype is already being endorsed by the major ALS association in Portugal. We plan to start deploying this to our first ALS users by Xmas this year. Beyond ALS patients, our current product is also relevant for other patients that struggle to type.’

Dimas is also now Unbabel’s appointee to Portugal’s newly formed Center for Responsible AI, where he is CEO. This is a partnership with several Portuguese startups and research centers to invest €78 million in AI research, creating 210 jobs under the Portuguese Recovery and Resilience Plan. Partners include Feedzai, Sword Health, Champalimaud Foundation, and others.

Generative AI is coming to wearable devices

Meanwhile, the version of Halo demonstrated to me showed the potential of Generative AI applied to wearable devices. Other teams are exploring this brave new world. Just this week, neuroscientists were able to recreate Pink Floyd’s ‘Another Brick in the Wall, Part 1’ using AI to decipher the brain’s electrical activity.

The concept has been around for a long time. In the 1980s, the movie Firefox, directed by and starring Clint Eastwood, posited a world where pilots would control weapons systems via thought-controlled platforms.

But this is only the first version of Unbabel’s Halo: ‘It’s still fairly limited in what we can do, but we’re already at around 20 words per minute of equivalent communication,’ said Pedro.

‘To give you a sense of this, Stephen Hawking was communicating at around two words per minute. Halo is now at around 20 words per minute. Consumer-use level is 60, and 80 is the target. People talk at a maximum of 120 to 130 words per minute. So if you get to 150, you’re starting to get to superhuman capabilities.’
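To make those rates concrete, the short calculation below converts each quoted speed into the time needed to produce a reply. The 30-word message length is an assumption chosen purely for illustration; the rates are the ones Pedro quotes.

```python
# Rates quoted by Pedro, in words per minute.
RATES_WPM = {
    "Stephen Hawking": 2,
    "Halo today": 20,
    "consumer-use level": 60,
    "target": 80,
    "'superhuman'": 150,
}

def seconds_to_send(words: int, wpm: float) -> float:
    """Time in seconds to produce `words` words at `wpm` words per minute."""
    return words * 60 / wpm

if __name__ == "__main__":
    MESSAGE_WORDS = 30  # assumed length of a short reply
    for label, wpm in RATES_WPM.items():
        print(f"{label:>20}: {seconds_to_send(MESSAGE_WORDS, wpm):6.1f} s")
```

At these rates, the same 30-word reply takes 15 minutes at two words per minute, 90 seconds at Halo's current 20, and 12 seconds at 150.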

Ref: TechCrunch
