CentML lands $27M from Nvidia, others to make AI models run more efficiently

Contrary to what you might’ve heard, the era of large seed rounds isn’t over, at least in the AI sector.

CentML, a startup developing tools to decrease the cost — and improve the performance — of deploying machine learning models, this morning announced that it raised $27 million in an extended seed round with participation from Gradient Ventures, TR Ventures, Nvidia and Microsoft Azure AI VP Misha Bilenko.

CentML initially closed its seed round in 2022, but extended the round over the last few months as interest in its product grew — bringing its total raised to $30.5 million.

The fresh capital will be used to bolster CentML’s product development and research efforts, as well as to expand the startup’s engineering team and its broader workforce of 30 people spread across the U.S. and Canada, according to CentML co-founder and CEO Gennady Pekhimenko.

Pekhimenko, an associate professor at the University of Toronto, co-founded CentML last year alongside Akbar Nurlybaev and Ph.D. students Shang Wang and Anand Jayarajan. Pekhimenko says that they shared a vision of creating tech that could increase access to compute in the face of the worsening AI chip supply problem.

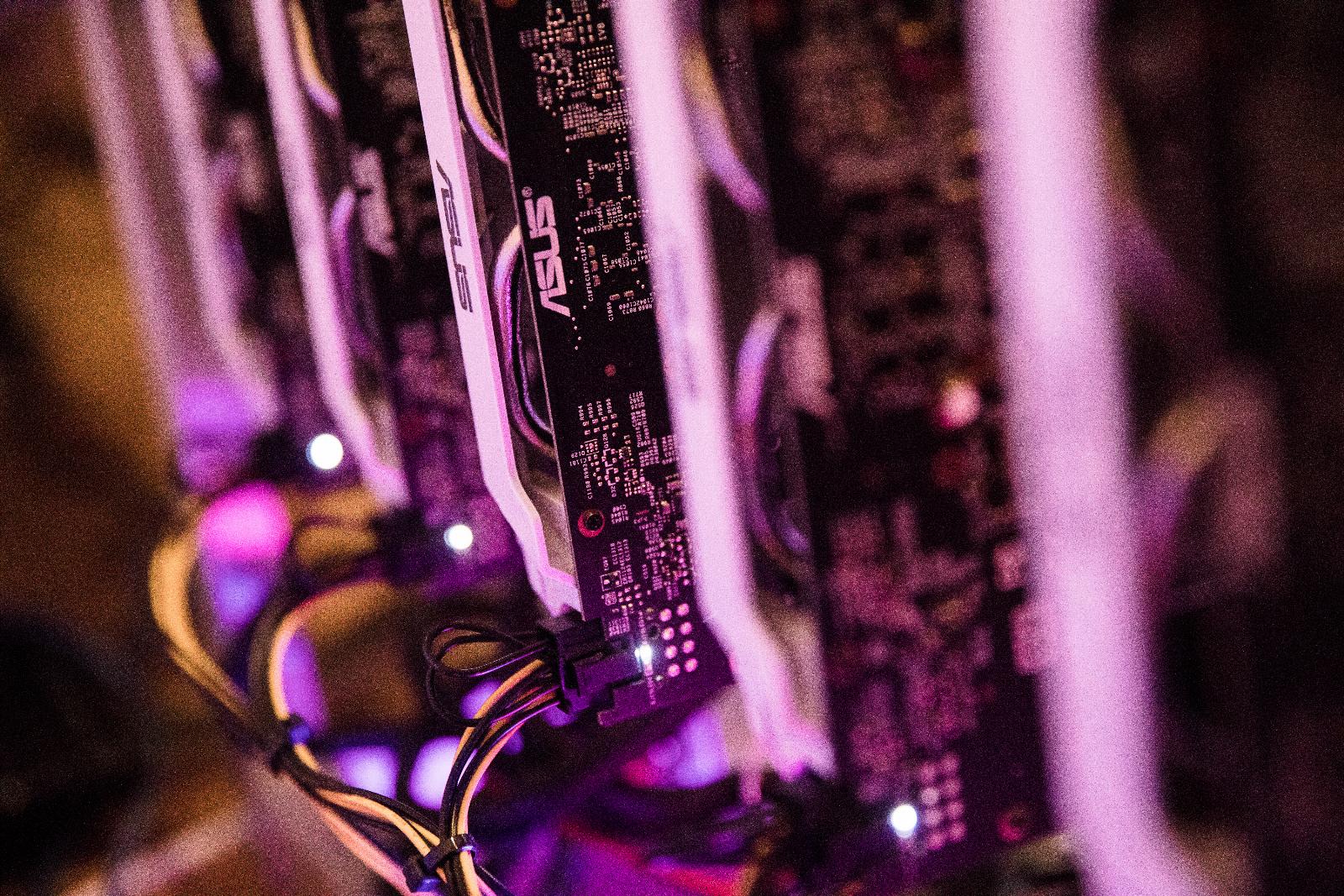

Most companies training models, particularly generative AI models like ChatGPT and Stable Diffusion, rely heavily on GPU-based hardware. GPUs’ ability to perform many computations in parallel makes them well-suited to training today’s most capable AI.

But there aren’t enough chips to go around.

Microsoft is facing a shortage of the server hardware needed to run AI so severe that it might lead to service disruptions, the company warned in a summer earnings report. And Nvidia’s best-performing AI cards are reportedly sold out until 2024.

That’s led some companies, including OpenAI, Google, AWS, Meta and Microsoft, to build (or explore building) their own custom chips for model training. But even this hasn’t proven to be a panacea. Meta’s efforts have been beset with issues, leading the company to scrap some of its experimental hardware. And Google hasn’t managed to keep pace with demand for its cloud-hosted, homegrown GPU equivalent, the tensor processing unit (TPU), Wired reported recently.

With spending on AI-focused chips expected to hit $53 billion this year and more than double in the next four years, according to Gartner, Pekhimenko felt the time was right to launch software that could make models run more efficiently on existing hardware.

‘Training AI and machine learning models is increasingly expensive,’ Pekhimenko said. ‘With CentML’s optimization technology, we’re able to reduce expenses up to 80% without compromising speed or accuracy.’

That’s quite a claim. But at a high level, CentML’s software is relatively easy to make sense of.

The platform attempts to identify bottlenecks during model training and predict the total time and cost to deploy a model. Beyond this, CentML provides access to a compiler — a component that translates a programming language’s source code into machine code that hardware like a GPU can understand — to automatically optimize model training workloads to perform best on target hardware.
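To make the idea of predicting training time and cost concrete, here is a minimal back-of-the-envelope sketch. It is not CentML’s method or API; the function name, the linear-scaling assumption and every number in it are hypothetical, chosen only to show how a throughput gain translates into fewer GPU-hours and a smaller bill.

```python
from dataclasses import dataclass


@dataclass
class TrainingEstimate:
    hours: float      # wall-clock hours for the whole run
    cost_usd: float   # total spend across all GPUs


def estimate_training(total_samples: int, samples_per_second: float,
                      num_gpus: int, usd_per_gpu_hour: float) -> TrainingEstimate:
    """Estimate wall-clock time and cost of a training run.

    Assumption: throughput scales linearly with GPU count, which real
    workloads rarely achieve. All inputs are hypothetical.
    """
    if samples_per_second <= 0 or num_gpus <= 0:
        raise ValueError("throughput and GPU count must be positive")
    seconds = total_samples / (samples_per_second * num_gpus)
    hours = seconds / 3600
    return TrainingEstimate(hours=hours,
                            cost_usd=hours * num_gpus * usd_per_gpu_hour)


# Hypothetical run: 3.6 billion samples on 8 GPUs billed at $2 per GPU-hour.
baseline = estimate_training(3_600_000_000, samples_per_second=1000,
                             num_gpus=8, usd_per_gpu_hour=2.0)
# Same run if an optimizing compiler doubled per-GPU throughput (illustrative).
optimized = estimate_training(3_600_000_000, samples_per_second=2000,
                              num_gpus=8, usd_per_gpu_hour=2.0)

savings = 1 - optimized.cost_usd / baseline.cost_usd
print(f"baseline:  {baseline.hours:.1f} h, ${baseline.cost_usd:,.0f}")
print(f"optimized: {optimized.hours:.1f} h, ${optimized.cost_usd:,.0f}")
print(f"savings:   {savings:.0%}")
```

Under these made-up inputs, doubling throughput halves both the run time and the cost. An 80% reduction like the one Pekhimenko cites would, by the same arithmetic, require roughly a fivefold gain in useful work per dollar.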

Pekhimenko claims that CentML’s software doesn’t degrade models and requires ‘little to no effort’ for engineers to use.

‘For one of our customers, we optimized their Llama 2 model to work 3x faster by using Nvidia A10 GPU cards,’ Pekhimenko added.

CentML isn’t the first to take a software-based approach to model optimization. It has competitors in MosaicML, which Databricks acquired in June for $1.3 billion, and OctoML, which landed an $85 million cash infusion in November 2021 for its machine learning acceleration platform.

But Pekhimenko asserts that CentML’s techniques don’t result in a loss of model accuracy, as MosaicML’s sometimes can, and that CentML’s compiler is ‘newer generation’ and more performant than OctoML’s compiler.

In the near future, CentML plans to turn its attention to optimizing not only model training but inference — i.e. running models after they’ve been trained. GPUs are heavily used in inference today as well, and Pekhimenko sees it as a potential avenue of growth for the company.

‘The CentML platform can run any model,’ Pekhimenko said. ‘CentML produces optimized code for a variety of GPUs and reduces the memory needed to deploy models, and, as such, allows teams to deploy on smaller and cheaper GPUs.’

Ref: techcrunch
